Blog

Microsoft is porting SQL Server to Linux

- Greg Duffy

- Tue, Mar 8, 2016

Something I thought I’d never see! An article on ZDNet discusses Microsoft’s plans to release a private preview of SQL Server for Linux, with general availability planned for mid-2017.

This is interesting given the heritage of Microsoft SQL Server and its relationship to Sybase, which at the time was primarily a Unix-based SQL database system.

The following extract from Wikipedia describes its origins.

In 1988 Microsoft joined Ashton-Tate and Sybase to create a variant of Sybase SQL Server for IBM OS/2 (then developed jointly with Microsoft), which was released the following year.[5] This was the first version of Microsoft SQL Server, and served as Microsoft’s entry to the enterprise-level database market, competing against Oracle, IBM, and later, Sybase. SQL Server 4.2 was shipped in 1992, bundled with OS/2 version 1.3, followed by version 4.21 for Windows NT, released alongside Windows NT 3.1. SQL Server 6.0 was the first version designed for NT, and did not include any direction from Sybase.

Interesting times indeed in the Microsoft world.

Rio Tinto scales up its big data ambitions

- Greg Duffy

- Mon, Feb 8, 2016

Great story on ITNews today on how mining giant Rio Tinto is using technology and big data to assist in mining exploration. Rio has massive data requirements, and storing and analysing this data is not possible without big data methodologies. Cloud-based storage infrastructure from providers such as Google, Amazon and Azure is giving Rio the edge.

As an example, Rio’s 900 haul trucks generate almost 5TB of data each day, providing the information needed to defer hundreds of thousands of dollars in maintenance costs.
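As a rough back-of-the-envelope check, the figures above work out to a little under 6 GB per truck per day. The sketch below assumes the data is spread evenly across the fleet and uses decimal units (1 TB = 1000 GB); both are my assumptions for illustration, not figures from the article, and the function names are made up.

```python
# Figures quoted in the post; "almost 5TB" is treated as an upper bound.
FLEET_TB_PER_DAY = 5.0
TRUCKS = 900


def per_truck_gb_per_day(fleet_tb: float, trucks: int) -> float:
    """Average daily data volume per truck in GB (assumes 1 TB = 1000 GB)."""
    return fleet_tb * 1000 / trucks


def fleet_tb_per_year(fleet_tb: float, days: int = 365) -> float:
    """Total fleet data volume over a year, in TB."""
    return fleet_tb * days


if __name__ == "__main__":
    per_truck = per_truck_gb_per_day(FLEET_TB_PER_DAY, TRUCKS)
    print(f"{per_truck:.1f} GB per truck per day")
    print(f"{fleet_tb_per_year(FLEET_TB_PER_DAY):.0f} TB per year across the fleet")
```

Even on these rough numbers, a year of truck telemetry alone approaches two petabytes, which gives a sense of why cloud-scale storage comes into the picture.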

Rio calls it the "Mine of the Future" program. In addition to cloud-based storage, Rio has a centre in Brisbane called the Processing Excellence Centre (PEC), which uses big data analytics to process this data.

The company is also one of the biggest users of autonomous technology in the resources sector, including driverless trucks and unmanned aerial systems.

SmartOS - The Complete Modern Operating System

- Greg Duffy

- Mon, Feb 8, 2016

SmartOS is a free and open-source SVR4 hypervisor based on the UNIX operating system, combining OpenSolaris technology with Linux’s KVM virtualisation. Its core kernel contributes to the illumos project.

It features several technologies: Crossbow, DTrace, KVM, ZFS, and Zones. Unlike other illumos distributions, SmartOS employs NetBSD pkgsrc package management.

SmartOS is designed to be particularly suitable for building clouds and generating appliances. It is developed for and by Joyent, but is open-source and free to use by anyone.

SmartOS is an in-memory operating system and boots directly into random access memory. It supports various boot mechanisms, such as booting from a USB thumb drive, from an ISO image, or over the network via PXE. One of the many benefits of this boot mechanism is that operating system upgrades are trivial, simply requiring a reboot from a newer SmartOS image.

Lessons from over 30 years in IT

- Greg Duffy

- Thu, Feb 4, 2016

I’ve been working in the technology industry for over 30 years now, and I wanted to share some of the lessons I’ve learned along the way.

Following are some tips gleaned from around the internet that I’ve learned to respect, and try to apply, over the years.

This list is pretty applicable to anyone, not just IT types.

The Internet of Things (IoT)

- Greg Duffy

- Tue, Nov 3, 2015

The Internet of Things (IoT) is the network of physical objects and devices, vehicles, buildings and other items which are embedded with electronics, software, sensors, and network connectivity, which enables these objects to collect and exchange data.

The Internet of Things allows objects to be sensed and controlled remotely across existing network infrastructure, creating opportunities for more direct integration of the physical world into computer-based systems, and resulting in improved efficiency, accuracy and economic benefit.

When IoT is augmented with sensors and actuators, the technology becomes an instance of the more general class of cyber-physical systems, which also encompasses technologies such as smart grids, smart homes, intelligent transportation and smart cities. Each thing is uniquely identifiable through its embedded computing system but is able to interoperate within the existing Internet infrastructure. Experts estimate that the IoT will consist of almost 50 billion objects by 2020.

Big Data - Hadoop, MongoDB, ElasticSearch

- Greg Duffy

- Sun, Aug 30, 2015

Big data is a broad term for data sets so large or complex that traditional data processing applications are inadequate. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, querying and information privacy.

The term often refers simply to the use of predictive analytics or certain other advanced methods to extract value from data, and seldom to a particular size of data set. Accuracy in big data may lead to more confident decision making, and better decisions can result in greater operational efficiency, cost reduction and reduced risk.

Analysis of data sets can find new correlations to spot business trends, prevent diseases, combat crime and so on. Scientists, business executives, practitioners of medicine, advertising and governments alike regularly meet difficulties with large data sets in areas including Internet search, finance and business informatics. Scientists encounter limitations in e-Science work, including meteorology, genomics, connectomics, complex physics simulations, biology and environmental research.

The future of the Operating System - LinuxCon 2015 keynote

- Greg Duffy

- Fri, Jun 5, 2015

The following was given as a keynote at LinuxCon + CloudOpen Japan 2015.

The linked slideshow also contains some great history and a discussion of how things have evolved to the point we are at now, with some serious disruption underway. Docker also gets a great wrap as the next evolutionary step in distributing and running applications.

To quote: "Linux has become the foundation for infrastructure everywhere as it defined application portability from the desktop to the phone and from the data center to the cloud. As applications become increasingly distributed in nature, the Docker platform serves as the cornerstone of Linux’s evolution solidifying the dominance of Linux today and into tomorrow."

Read more at the spf13.com web site: http://spf13.com/presentation/the-future-of-the-os-linuxcon-2015-keynote/

Virtualization

- Greg Duffy

- Sat, May 2, 2015

In computing, virtualization refers to the act of creating a virtual (rather than actual) version of something, including virtual computer hardware platforms, operating systems, storage devices, and computer network resources.

Different types of hardware virtualization include:

- Full virtualization – almost complete simulation of the actual hardware to allow software, which typically consists of a guest operating system, to run unmodified.

- Partial virtualization – some but not all of the target environment attributes are simulated. As a result, some guest programs may need modifications to run in such virtual environments.

- Paravirtualization – a hardware environment is not simulated; however, the guest programs are executed in their own isolated domains, as if they are running on a separate system. Guest programs need to be specifically modified to run in this environment.

In addition to the traditional virtualization platforms such as VMware, Hyper-V, Xen and KVM, we also have expertise in the latest craze, containerisation. This is best exemplified by Docker but also exists in a very compelling form on the SmartOS platform.

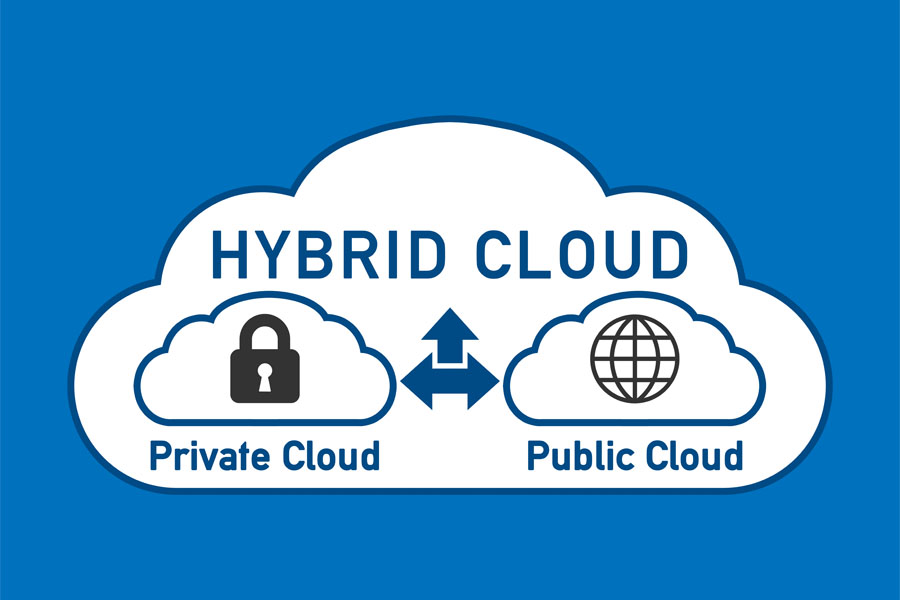

Hybrid Cloud - Public vs Private Data

- Greg Duffy

- Sun, Apr 5, 2015

A hybrid cloud is an integrated cloud service utilising both private and public clouds to perform distinct functions within the same organisation.

All cloud computing services should offer certain efficiencies to differing degrees, but public cloud services are likely to be more cost efficient and scalable than private clouds.

Therefore, an organisation can maximise its efficiencies by employing public cloud services for all non-sensitive operations, relying on a private cloud only where it is required, and ensuring that all of its platforms are seamlessly integrated.
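The placement rule described above can be sketched in a few lines of Python. This is only an illustration: the `Workload` class, the single `sensitive` flag and the example workload names are all hypothetical, and a real organisation would classify data against a much richer policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    sensitive: bool  # hypothetical flag standing in for a real data classification


def place(workload: Workload) -> str:
    """Send sensitive workloads to the private cloud, everything else to public."""
    return "private" if workload.sensitive else "public"


def plan(workloads: list[Workload]) -> dict[str, str]:
    """Map each workload name to the cloud it should run in."""
    return {w.name: place(w) for w in workloads}


if __name__ == "__main__":
    demo = [
        Workload("marketing-site", sensitive=False),
        Workload("payroll", sensitive=True),
    ]
    for name, cloud in plan(demo).items():
        print(f"{name}: {cloud} cloud")
```

Keeping the decision in one small function means the rule can be tightened later (for example, by adding regulatory categories) without touching the systems that consume the plan.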

Queensland: Legacy IT bringing down infosec efforts

- Greg Duffy

Story on ITNews today on the state of the Queensland Government’s efforts in the infosec space.

Queensland’s big problem, however, is legacy IT. It is arguably the nation’s capital for out-of-support software.

In his 2012 audit of the state’s IT environment, then-GCIO Peter Grant calculated 19 percent of all technologies were outside vendor support. At the time, only 54 percent of agencies had successfully migrated off Windows XP.

The state’s audit office said in 2014 that security remained the number one IT control concern it had for Queensland agencies.

In the 2013-14 year, security concerns made up 84 percent of all IT-related internal control issues identified, up from 64 percent in 2012-13.

Read more: http://www.itnews.com.au/blogentry/queensland-legacy-it-bringing-down-infosec-efforts-409178

Source: ITNews

© ByteBack Group 2015.